Racing, Regulating, and Reckoning with Transformative AI

Last year we published a round-up of articles framing the biggest AI policy debates in 2024. Here is my rear-view reflection for 2025 – who said what, why it mattered, and what it may mean for the year ahead.

America First

1. Trump’s AI Action Plan seeks customers, not partners – Alex Krasodomski (Chatham House)

The Trump Administration released its AI Action Plan in July. It signalled Washington’s intention to ‘win the AI race’ by minimising red tape, building AI infrastructure, and driving global adoption of American AI technology.

Krasodomski unpacked the AI Action Plan and its implications for US allies, describing the overall goal as an effort to tie partners into ‘dependent tech ecosystems’ based on US AI.

This comes as the global rules-based order seems to be falling apart, as decried by Canadian Prime Minister Carney in his speech in Davos a few weeks ago. For middle powers in 2026, a key question will be how much influence they can exert over the AI supply chain, in a world increasingly bifurcated between US and Chinese ecosystems.

On AI Policy Bulletin: Middle Powers Can Gain AI Influence Without Building the Next ChatGPT

2. Don’t Overthink the AI Stack – Dean Ball

Alongside the AI Action Plan, President Trump also signed an executive order on “Promoting the Export of the American AI Technology Stack.” But what does the ‘AI stack’ actually mean?

Dean Ball spent a chunk of 2025 as Senior Policy Advisor for AI and Emerging Technology in the White House – and was primary staff author of this executive order.

As Ball explained, the intention of the AI Export Plan was for US development finance to drive investment in foreign data centers. To ensure this advances America’s strategic interests, recipients of US financing must use American tech across the ‘full stack’ of AI infrastructure – meaning chips, data, AI models and applications, and cybersecurity measures.

Ball’s piece came as the US government sought feedback from industry and partner governments, and as it stumbled with the initial implementation of its AI Export Plan.

Read AIPB’s profile on Dean Ball here: Dean Ball Joins the Trump Administration as Senior Policy Advisor for AI & Emerging Tech

3. What is California’s AI safety law? – Malihe Alikhani and Aidan T. Kane (Brookings)

In September, California Governor Gavin Newsom signed SB 53, the first US state law specifically targeting frontier AI models. The law established a ‘trust but verify’ framework requiring frontier developers to publish safety protocols and report serious incidents.

As Alikhani and Kane explained, SB 53 showed how California was filling the governance vacuum as Congress remained deadlocked on comprehensive AI legislation.

This trend continued: following California’s lead, New York enacted the RAISE Act in December, requiring large AI developers to report safety incidents. And last week California’s SB 813, a bill that would establish expert panels to set voluntary AI safety standards, passed the state Senate.

The backdrop to the wave of state legislation is President Trump’s executive order in December, threatening to withhold federal funding from states with ‘conflicting’ regulations. The federal-state battle over who can govern AI is set to continue in the months ahead.

More on this: What the UK Can Learn from California’s Frontier AI Regulation Battle

From Brussels to Beijing

4. AI Safety under the EU AI Code of Practice – A New Global Standard? – Mia Hoffmann (CSET)

While the US federal government has been loosening AI regulations, the EU has been moving in the opposite direction.

The EU passed its AI Act in 2024, imposing obligations on any company offering AI products or services in the European market. In July 2025, the European Commission followed up with a Code of Practice to help providers of general-purpose AI models comply with the Act.

Hoffmann looked at the safety and security chapter of the Code – which will have the most bearing on frontier AI companies such as OpenAI, Anthropic, Google, and Meta. The voluntary Code sets out risk management processes that go significantly beyond what these companies are currently doing.

Most of the EU AI Act is set to be enforced in August 2026. But keep an eye on the European Commission’s proposal to delay some of the Act’s most significant requirements on high-risk AI systems – as Europe grapples with whether and how to compete with the US and China on frontier AI.

Read more: It’s Too Hard for Small and Medium-Sized Businesses to Comply With the EU AI Act: Here’s What to Do

5. China’s AI Policy at the Crossroads: Balancing Development and Control in the DeepSeek Era – Scott Singer and Matt Sheehan (Carnegie Endowment)

Since DeepSeek burst onto the scene in January 2025, Chinese policymakers have been busy. Among other things, they announced measures mandating the labelling of synthetic content; launched a three-month campaign cracking down on illegal AI applications; and released China’s Global AI Governance Action Plan.

Singer and Sheehan showed how China’s AI policy since 2017 has cycled between heavy regulation and lighter touch, depending on how confident Beijing felt about China’s tech capabilities and economic growth. While DeepSeek’s success gave a boost to confidence, it came while the economy remained weak – placing policymakers in a difficult position.

The takeaway is that Beijing must choose between a return to tighter state control and the kind of flexibility that enabled DeepSeek to emerge in the first place. Early signs suggest the voices for state control are winning, but Chinese innovation may surprise the skeptics again – perhaps this time with a push into robotic AI.

Reading the tea leaves

6. Long timelines to advanced AI have gotten crazy short – Helen Toner

AI policy expert and former OpenAI board member Helen Toner pointed out that views among AI experts had shifted regarding timelines to transformative AI. By ‘transformative AI’, think: AI that can outperform humans at virtually all tasks.

As recently as five years ago, there was plenty of skepticism that artificial general intelligence (AGI) would arrive in our lifetimes. Now, many AI experts and industry leaders think it will happen in the next few years. And even the more skeptical experts have generally revised down their forecasts to within the next decade or two.

This narrative shift was visible in 2025 policy discussions. US Senator Mike Rounds introduced legislation requiring the Pentagon to establish an AGI Steering Committee. Ben Buchanan, former Biden White House AI adviser, said he thought we’d see “extraordinarily capable AI systems... quite likely during Donald Trump’s presidency.”

The AGI discourse appears to be strengthening into 2026 – last week the UK House of Lords debated what the UK should do about superintelligent AI.

7. AI 2027 – Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, and Romeo Dean

A group of AI forecasters released a research paper predicting what the next few years of AI capability growth might look like. The paper quickly went viral, attracting readers as senior as US Vice President JD Vance.

While the paper sparked plenty of disagreement, it was taken seriously by many – not least because of the impressive forecasting track record of some of its authors.

The scenario they painted was one where AI companies use their own models to accelerate the pace of AI research and development, leading to an ‘intelligence explosion’ around 2027. This is followed by sharp US-China competition, critical decisions about whether to race ahead in capabilities or proceed more cautiously, and superintelligent AI before the end of the decade.

The authors have since pushed back their timelines for transformative AI by a few years, and AI timelines and takeoffs remain hotly debated. 2026 should provide plenty of data on whose predictions are most on track.

Daniel Kokotajlo also co-wrote a piece for AI Policy Bulletin: We Should Not Allow Powerful AI to Be Trained in Secret: The Case for Increased Public Transparency

8. AI as Normal Technology – Arvind Narayanan and Sayash Kapoor

Published just weeks after AI 2027, this piece offered a major counter-narrative to forecasts of imminent superintelligence.

Princeton’s Narayanan and Kapoor argued that AI will follow historical patterns of transformative technologies like electricity and the internet. This will still be hugely impactful, but ‘normal’ in the sense that change will be gradual, humans will retain control, and AI will augment rather than replace human abilities.

Predictably, the piece prompted fierce debate, with critics arguing it underestimated the potential for AI self-improvement, while supporters saw it as essential grounding for realistic AI governance.

Strategizing superintelligence

9. Superintelligence Strategy – Dan Hendrycks, Eric Schmidt, Alexandr Wang

Former Google CEO Schmidt, Scale AI founder Wang, and Center for AI Safety Director Hendrycks proposed this framework for navigating the race to superintelligent AI.

In a nod to nuclear deterrence, they termed their proposal Mutual Assured AI Malfunction or MAIM – a deterrence regime where any country’s aggressive bid for AI dominance would be met with sabotage by rivals.

The paper challenged the idea of a Manhattan Project for AI (a recommendation by the US-China Economic and Security Review Commission), warning that a race could trigger catastrophic escalation. It sparked debate in AI governance circles.

Peter Wildeford and Oscar Delaney contributed to this discussion on AI Policy Bulletin, arguing that MAIM lacks the characteristics that made nuclear deterrence work.

Measuring what’s happening

10. UK AISI Frontier AI Trends Report

The UK’s AI Security Institute published its first public assessment of AI capabilities. As the UK Prime Minister’s AI Advisor Jade Leung said, this constituted “the most robust public evidence from a government body so far of how quickly frontier AI is advancing.”

The report arrived as UK AISI was designated Network Coordinator for the International Network of AI Safety Institutes.

Among other things, the UK AISI found AI making rapid progress in cybersecurity capabilities. Two years ago, AI models could barely complete tasks requiring basic cyber skills; by late 2025, some models could handle expert-level work requiring a decade of human experience.

More worryingly, the length of tasks that models can complete unassisted is doubling roughly every eight months – a trend backed by widely cited research from the nonprofit METR. As of publication, METR reports that leading models can complete software engineering tasks that would take humans over 5 hours.
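To make the doubling arithmetic concrete, here is a minimal sketch of what an eight-month doubling time would imply if it simply continued. The function name is hypothetical, the 5-hour baseline is the lower bound quoted above, and a constant doubling time is an assumption for illustration, not a forecast made by UK AISI or METR.

```python
def projected_horizon_hours(months_ahead, baseline_hours=5.0, doubling_months=8.0):
    """Task length (in human hours) a model could complete after `months_ahead`,
    ASSUMING the horizon keeps doubling every `doubling_months` months.
    Both defaults are illustrative values taken from the text above."""
    return baseline_hours * 2 ** (months_ahead / doubling_months)


# Print the implied horizon at a few points under the constant-doubling assumption.
for months in (0, 8, 16, 24):
    hours = projected_horizon_hours(months)
    print(f"{months:>2} months: {hours:.0f} hours")
# 24 months gives 40 hours, roughly a full human working week
```

Under these assumptions, three doublings would turn a 5-hour task horizon into one of about a working week within two years. Whether the trend actually holds is exactly what is being debated.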

Bonus article: Popping the bubble?

11. OpenAI, Nvidia Fuel $1 Trillion AI Market With Web of Circular Deals – Bloomberg

Amid all the AI hype, 2025 also saw a torrent of commentary that the AI economy was actually a bubble. Surprisingly, plenty of tech CEOs contributed to this, including Sam Altman, Mark Zuckerberg, and Jeff Bezos.

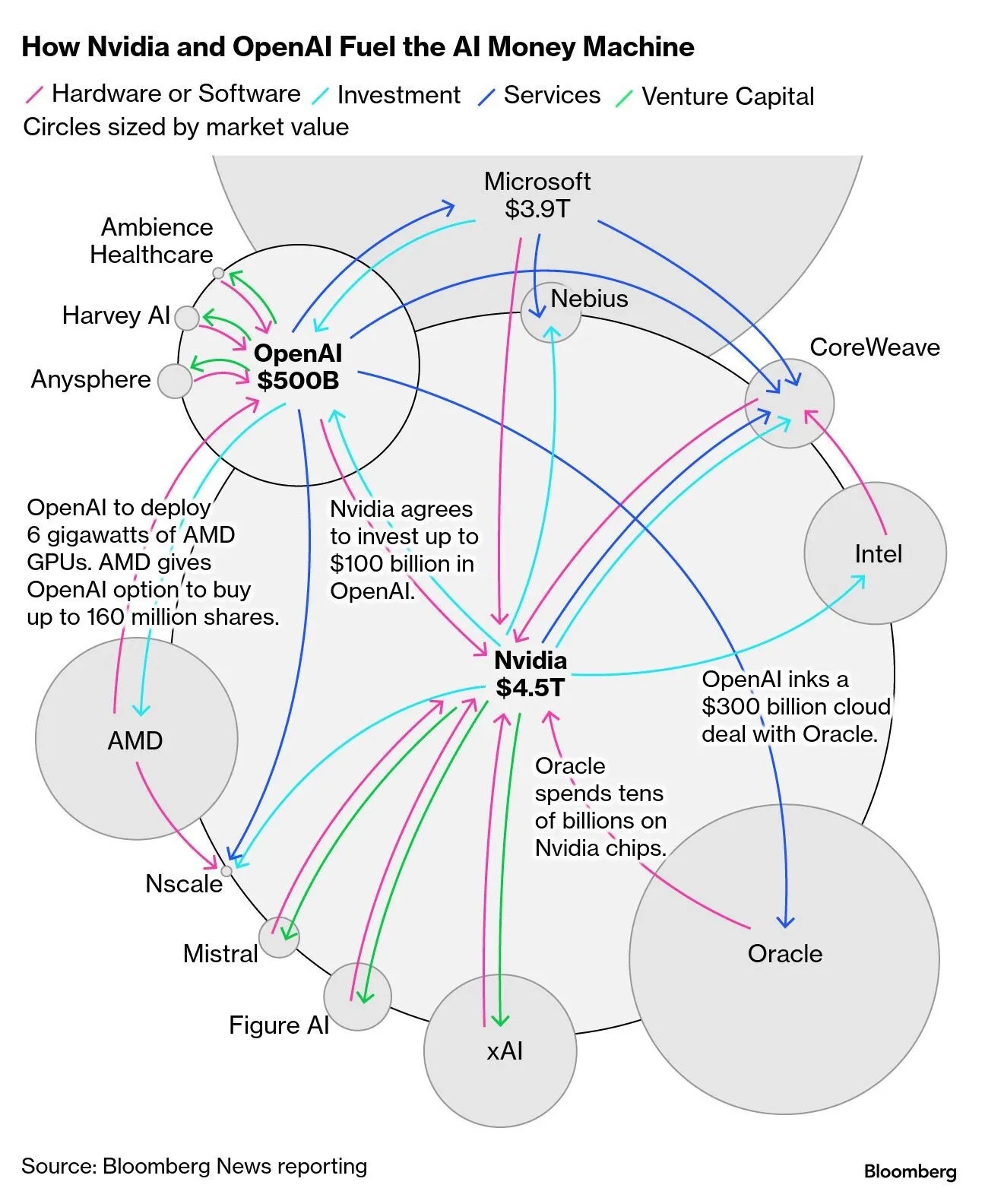

Epitomising the bubble narrative was this diagram published by Bloomberg, showing the circular financing arrangements between a web of tech companies, including Nvidia, OpenAI, Oracle and AMD.

Whether we’re headed for an AI economic crash remains to be seen – but the answer will have big consequences for AI governance.

Beyond the economic fallout (likely severe), a burst bubble might give policymakers more time to prepare for transformative technology, if it slows down AI development and deployment. Conversely, we might see both an AI crash and transformative AI in the next few years – the two are not mutually exclusive.

A reminder that AI Policy Bulletin is scaling up. If you’re a researcher with an idea you’d like to communicate, pitch to us here. If you work in policy, let us know what topics you’d like us to cover.
